Common Agentic Patterns in LangGraph

When building AI agents with Large Language Models (LLMs), there are several proven patterns that help create more intelligent and reliable systems. LangGraph provides excellent tools to implement these patterns. Let's explore the most common agentic workflows that you can use to build powerful AI applications.

What Are Agentic Patterns?

Agentic patterns are design approaches that make AI systems more autonomous and capable. Instead of simple input-output operations, these patterns enable AI agents to think, plan, correct themselves, and work collaboratively to solve complex problems.

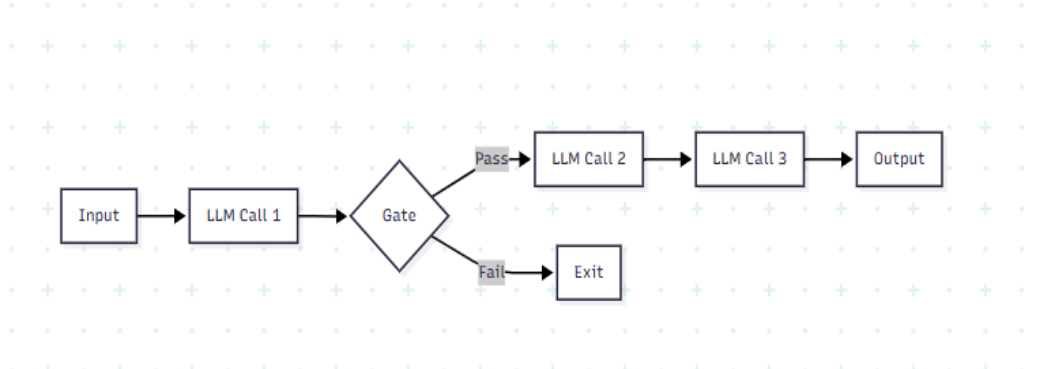

1. Prompt Chaining

What it is: Prompt chaining connects multiple LLM calls in a sequence, where each call builds upon the previous one's output.

How it works:

- Input goes to the first LLM call

- Output from LLM Call 1 becomes input for LLM Call 2

- This continues until you get the final result

- Each step can perform different tasks (analysis, creation, refinement)
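The steps above can be sketched in plain Python. This is a minimal, framework-agnostic illustration and not LangGraph's own API: `fake_llm`, `make_step`, and `run_chain` are hypothetical names, and `fake_llm` only wraps its prompt so the example runs without a model. In a LangGraph application, each step would typically become a node, with edges connecting the nodes in order.

```python
from typing import Callable, List


# Hypothetical stand-in for a real LLM call; swap in your model client.
def fake_llm(prompt: str) -> str:
    return f"<{prompt}>"


def make_step(instruction: str) -> Callable[[str], str]:
    """Build one chain step that applies an instruction to the previous output."""
    def step(previous_output: str) -> str:
        return fake_llm(f"{instruction}: {previous_output}")
    return step


def run_chain(steps: List[Callable[[str], str]], user_input: str) -> str:
    """Feed the output of each step into the next one, in order."""
    result = user_input
    for step in steps:
        result = step(result)
    return result


# Three chained calls: topic -> outline -> detailed report.
chain = [
    make_step("Pick a topic angle"),
    make_step("Write an outline"),
    make_step("Write a detailed report"),
]
final = run_chain(chain, "solar power")
print(final)
```

Because every step has the same signature (string in, string out), steps can be added, removed, or reordered without touching `run_chain`.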

Use cases:

- Multi-step document analysis (topic (LLM 1) → outline (LLM 2) → detailed report (LLM 3))

- Complex reasoning tasks that benefit from breaking down into smaller steps

- Content creation workflows

Example workflow:

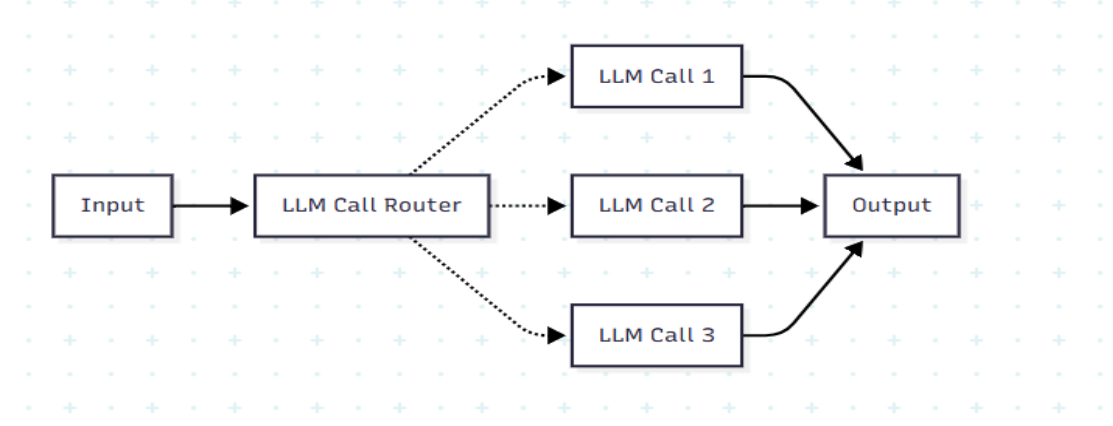

2. Routing

What it is: Routing directs different types of inputs to specialized LLM calls based on the content or context.

How it works:

- Input comes to an LLM Call Router

- The router analyzes the input and decides which specialized LLM to use

- Different LLM calls handle different types of queries:

  - Query-related input: technical questions, research queries

  - Sales-related input: product inquiries, pricing questions

- The appropriate LLM processes the request and returns the output
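A minimal sketch of this flow, assuming a router that returns a route label and one handler per label. All names here (`classify`, `route`, `handle_query`, `handle_sales`, `SALES_KEYWORDS`) are hypothetical, and the keyword check is only a stand-in for the router LLM call so the example runs offline.

```python
from typing import Callable, Dict


# Hypothetical specialist handlers; each stands in for a specialized LLM call.
def handle_query(text: str) -> str:
    return f"[technical answer] {text}"


def handle_sales(text: str) -> str:
    return f"[sales answer] {text}"


HANDLERS: Dict[str, Callable[[str], str]] = {
    "query": handle_query,
    "sales": handle_sales,
}

# Assumption: a crude keyword list replaces the router LLM in this sketch.
SALES_KEYWORDS = ("price", "pricing", "discount")


def classify(text: str) -> str:
    """Stand-in for the router LLM call: return a route label."""
    lowered = text.lower()
    if any(word in lowered for word in SALES_KEYWORDS):
        return "sales"
    return "query"


def route(text: str) -> str:
    """Pick the handler for the input and return its output."""
    label = classify(text)
    return HANDLERS[label](text)


print(route("What is the pricing for the pro tier?"))
print(route("How do I reset my API key?"))
```

Keeping the label-to-handler mapping in a dictionary means a new department can be added by registering one more handler, without changing `route`.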

Use cases:

- Customer service chatbots that handle different departments

- Multi-domain question answering systems

- Content classification and processing

Example workflow:

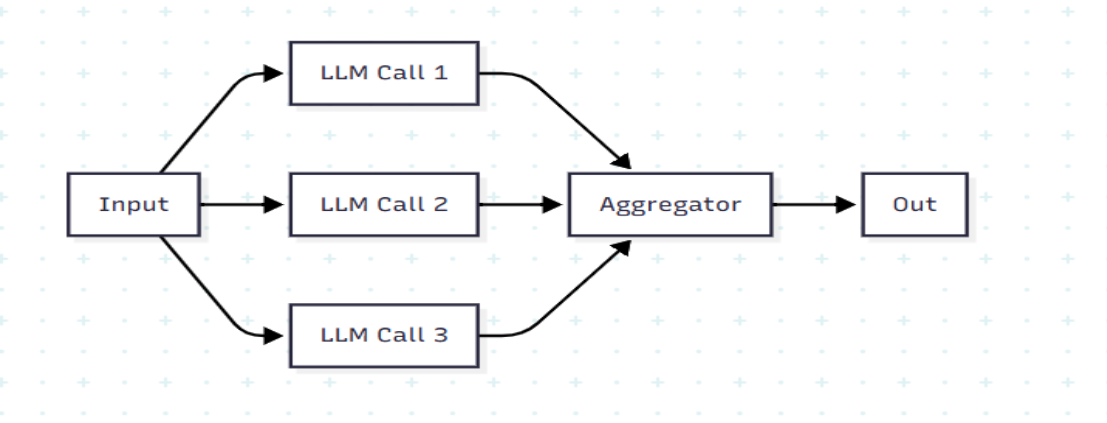

3. Parallelization

What it is: Parallelization runs multiple LLM calls simultaneously on the same input, then combines their outputs.

How it works:

- Single input is sent to multiple LLM calls at the same time

- Each LLM call processes the input independently

- An aggregator combines all outputs into a final result

- The aggregator often applies a final check, such as content moderation, before returning the result

Use cases:

- Content moderation systems

- Multi-perspective analysis

- Consensus-based decision making

- Quality assurance processes

Simple Example with Scenario:

When a user uploads content to YouTube, the platform's content moderation system analyzes the video or image input from multiple perspectives:

- Community Guidelines Check – Verifies whether the content complies with YouTube's community standards.

- Misinformation Detection – Assesses whether any false or misleading information is present.

- Harmful Content Detection – Identifies the presence of any harmful or inappropriate material.

These checks are performed in parallel. Each one returns a score or result. Based on the combined outcomes of these evaluations, YouTube decides whether to allow the content to be published or not.
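The scenario above can be sketched as follows. This is an illustration under stated assumptions, not how YouTube's system works: the three check functions are hypothetical stubs that return a risk score between 0.0 and 1.0, the 0.5 threshold is arbitrary, and a thread pool stands in for whatever concurrency your framework provides.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


# Hypothetical checks; each stands in for an independent LLM call
# and returns a risk score from 0.0 (safe) to 1.0 (unsafe).
def community_guidelines_check(content: str) -> float:
    return 0.9 if "spam" in content else 0.1


def misinformation_check(content: str) -> float:
    return 0.8 if "miracle cure" in content else 0.1


def harmful_content_check(content: str) -> float:
    return 0.95 if "violence" in content else 0.05


CHECKS: Dict[str, Callable[[str], float]] = {
    "community_guidelines": community_guidelines_check,
    "misinformation": misinformation_check,
    "harmful_content": harmful_content_check,
}


def run_checks_in_parallel(content: str) -> Dict[str, float]:
    """Submit every check at once, then collect the scores by name."""
    with ThreadPoolExecutor(max_workers=len(CHECKS)) as pool:
        futures = {name: pool.submit(check, content) for name, check in CHECKS.items()}
        return {name: future.result() for name, future in futures.items()}


def aggregate(scores: Dict[str, float], threshold: float = 0.5) -> bool:
    """Allow publication only if every risk score is below the threshold."""
    return all(score < threshold for score in scores.values())


scores = run_checks_in_parallel("a cooking tutorial")
print(scores, "publish" if aggregate(scores) else "block")
```

The aggregation rule here is "every check must pass". A weighted average or a majority vote would be equally valid choices, depending on how strict the decision needs to be.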

Example workflow:

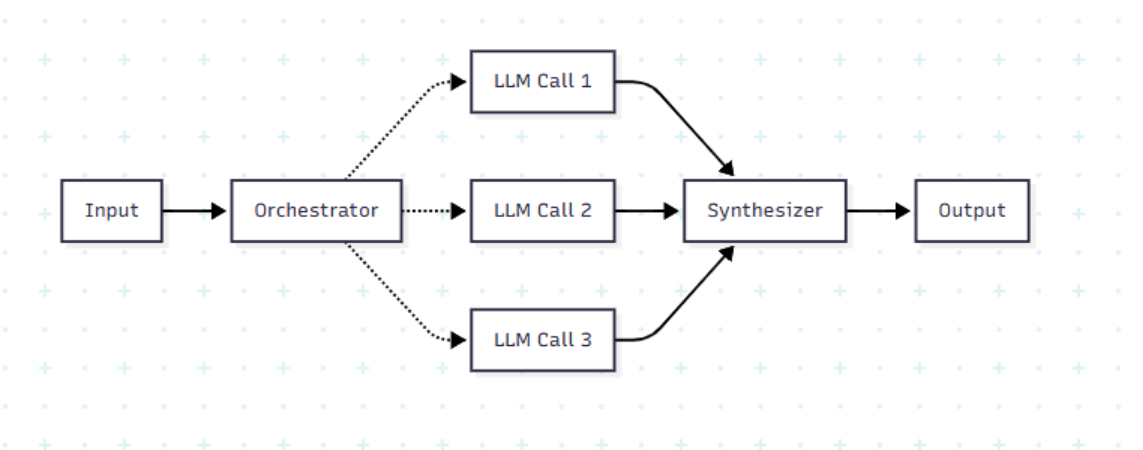

4. Orchestrator Workers (Similar to Parallelization Workflow)

What it is: An orchestrator manages and coordinates multiple worker LLMs. This resembles parallelization, but the orchestrator adds intelligent control: it tracks the status of each worker, can trigger retries for failed or uncertain results, and adjusts the flow dynamically based on intermediate outcomes.

How it works:

- Input goes to an Orchestrator

- Orchestrator distributes work to multiple LLM workers

- Workers process their assigned tasks

- Synthesizer combines all worker outputs

- Final output is delivered

Use cases:

- Complex project management

- Distributed problem solving

- Multi-agent collaboration systems

Simple Example with Scenario:

Let’s reuse the Content Moderation System example from the Parallelization flow. Adding the following conditions turns it into an Orchestrator workflow:

- If any check fails (e.g., a high score for harmful content), the orchestrator triggers a retry using a different moderation model or escalates the content to a manual review agent.

- If all scores are acceptable after evaluation or retries, the content is approved and published.

This demonstrates that the orchestrator has control over the tasks performed by all the workers, enabling it to coordinate and manage the flow in an agentic, intelligent manner.
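A minimal sketch of that retry-and-escalate logic, assuming each check has a primary and a backup moderation model. The worker functions, the `WORKERS` table, and the 0.5 threshold are all hypothetical. For brevity the workers run one after another here; they could equally be dispatched in parallel, as in the previous pattern.

```python
from typing import Callable, Dict, Tuple

Check = Callable[[str], float]


# Hypothetical workers: a primary and a backup moderation model per check.
# Each returns a risk score from 0.0 (safe) to 1.0 (unsafe).
def primary_harm(content: str) -> float:
    return 0.9 if "fight" in content else 0.1


def backup_harm(content: str) -> float:
    return 0.9 if "street fight" in content else 0.1


def primary_misinfo(content: str) -> float:
    return 0.8 if "miracle cure" in content else 0.1


def backup_misinfo(content: str) -> float:
    return 0.8 if "miracle cure" in content else 0.1


WORKERS: Dict[str, Tuple[Check, Check]] = {
    "harmful_content": (primary_harm, backup_harm),
    "misinformation": (primary_misinfo, backup_misinfo),
}


def orchestrate(content: str, threshold: float = 0.5) -> str:
    """Run each worker, retry failures with the backup model, then decide."""
    for name, (primary, backup) in WORKERS.items():
        score = primary(content)
        if score >= threshold:
            # The first model flagged the content: retry with a different model.
            score = backup(content)
        if score >= threshold:
            # Still flagged after the retry: hand over to manual review.
            return f"escalated to manual review ({name})"
    return "approved"


print(orchestrate("a cooking tutorial"))
print(orchestrate("pillow fight at a sleepover"))
print(orchestrate("street fight footage"))
```

The difference from plain parallelization is that the control flow depends on intermediate results: a worker's output decides whether another worker is called at all.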

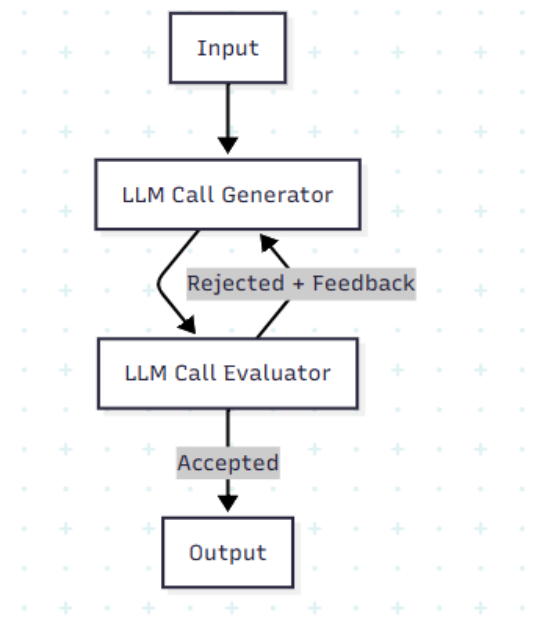

5. Evaluator Optimizer

What it is: A feedback loop in which one LLM call generates a solution and another evaluates it, so the output improves with each attempt until it is accepted.

The Challenge: "You are given a task, but you know it cannot be completed well in a single attempt."

How it works:

- Input goes to LLM Call Generator

- Generator creates a solution

- LLM Call Evaluator reviews the solution

- If rejected, feedback goes back to the generator

- Process repeats until the solution is accepted

- Final accepted output is delivered
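The loop above can be sketched as follows. `generate` and `evaluate` are hypothetical stubs for the generator and evaluator LLM calls. The `max_rounds` cap is an assumption that is not part of the steps above; it is added so the loop cannot run forever if the evaluator never accepts.

```python
from typing import Optional, Tuple


# Hypothetical generator: stands in for the generator LLM call.
def generate(task: str, feedback: Optional[str]) -> str:
    draft = f"Draft about {task}."
    if feedback:
        # A real generator would rewrite the draft using the feedback text.
        draft += " Now with a concrete example."
    return draft


# Hypothetical evaluator: stands in for the evaluator LLM call.
def evaluate(draft: str) -> Tuple[bool, str]:
    if "example" in draft:
        return True, "accepted"
    return False, "Add a concrete example."


def refine(task: str, max_rounds: int = 3) -> Tuple[str, int]:
    """Generate, evaluate, and retry with feedback until accepted or capped."""
    feedback: Optional[str] = None
    draft = ""
    for round_number in range(1, max_rounds + 1):
        draft = generate(task, feedback)
        accepted, feedback = evaluate(draft)
        if accepted:
            return draft, round_number
    # Cap reached without acceptance: return the last draft as-is.
    return draft, max_rounds


draft, rounds = refine("LangGraph")
print(rounds, draft)
```

Returning the round count alongside the draft makes it easy to track how many iterations a task typically needs, which helps when choosing the cap.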

Use cases:

- Email draft refinement

- Blog post writing and editing

- Poem writing with iterative improvement

- Any creative task that needs iteration to improve

Simple Example with Scenario:

In blog generation with a feedback agent flow, the user first provides a topic. The blog generator agent creates a draft, which a feedback agent then reviews for quality, clarity, and structure. If the feedback score is low, the generator retries and improves the content based on the suggestions. This loop continues until the content meets the required standard. Finally, the approved blog is delivered or published.

Implementation Tips

- Start simple: Begin with prompt chaining and add complexity as needed.

- Monitor performance: Track which patterns work best for your specific use cases.

- Combine patterns: You can mix these patterns. For example, use routing to direct queries to specialized evaluator-optimizer systems.

- Handle failures gracefully: Always include fallback mechanisms when LLM calls fail.

- Optimize for your domain: Customize these patterns based on your specific requirements and constraints.
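As one possible reading of the "handle failures gracefully" tip, the sketch below tries a list of models in order and falls back to a default message if all of them fail. The helper `call_with_fallback` and both model stubs are hypothetical; a production version would likely catch narrower exception types and log each failure.

```python
from typing import Callable, Sequence


def call_with_fallback(
    prompt: str,
    models: Sequence[Callable[[str], str]],
    default: str,
) -> str:
    """Try each model in order; return the default if every call raises."""
    for model in models:
        try:
            return model(prompt)
        except Exception:
            # This model failed: move on to the next one in the list.
            continue
    return default


# Hypothetical models: one that always fails and one that succeeds.
def flaky_model(prompt: str) -> str:
    raise TimeoutError("simulated timeout")


def backup_model(prompt: str) -> str:
    return f"backup answer to: {prompt}"


print(call_with_fallback("hello", [flaky_model, backup_model], "Sorry, try again later."))
```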

Conclusion

These agentic patterns in LangGraph provide powerful ways to build sophisticated AI systems. By understanding when and how to use each pattern, you can create agents that are more reliable, intelligent, and capable of handling complex real-world tasks.

Remember, the key is to match the pattern to your specific needs. Start with simpler patterns and gradually incorporate more complex ones as your requirements grow. With these tools, you can build AI agents that truly act as intelligent assistants rather than simple question-answering systems.